EEG-based Emotion-Aware Music Recommendation System

Intro

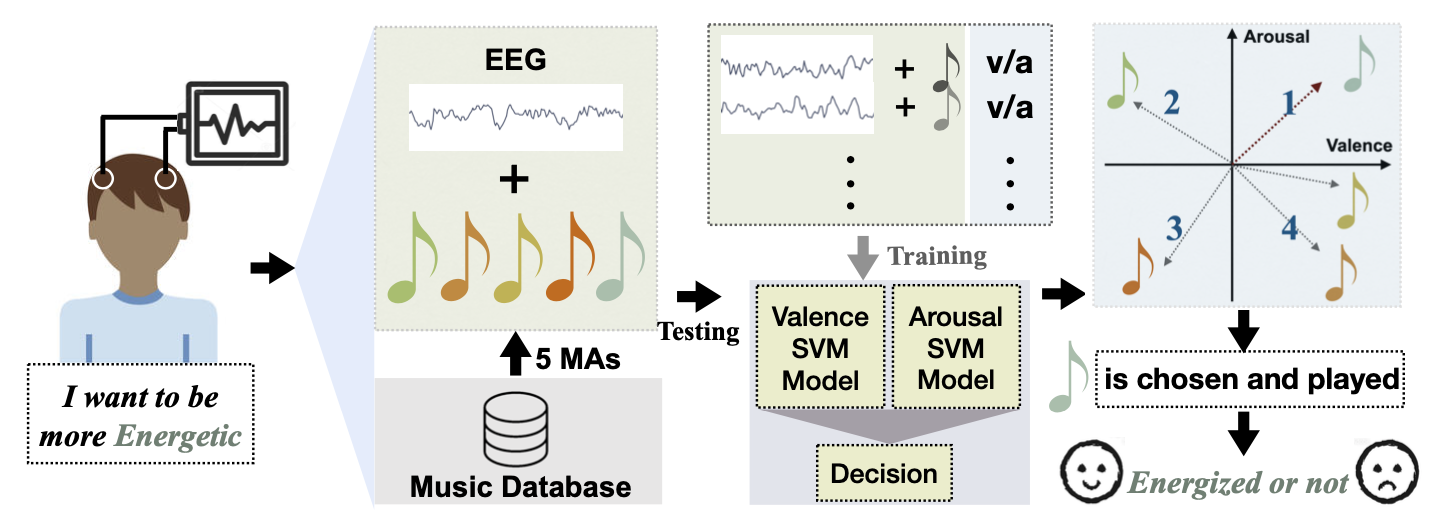

BEAMERS (Brain-Engaged, Active Music-based Emotion Regulation System) is a real-time, customized music-based emotion regulation system that uses EEG signals and music features to predict a user's emotion variation in the valence-arousal model before recommending music.

The system supports different target emotional states, helping users regulate their mood through adaptable and controllable music recommendations. BEAMERS achieved an accuracy of over 85% with 2-second EEG segments.

Motivation

Given dramatic changes in lifestyle in modern society, millions of people are affected by anxiety, depression, exhaustion, and other emotional problems, and the demand for proper emotional care is growing rapidly. Music, which has been shown to be highly effective and accessible for evoking emotions and influencing moods, has been increasingly adopted as a powerful tool in mental fitness applications. However, different people have diverse tastes in and perceptions of a piece of music, and even the same person, when in an unstable mental state, may feel differently about the same song. An adaptable, customized system that considers the user's current and future mental state could therefore make a difference.

EEG has become a dominant modality for studying brain activity, including emotion recognition in human-computer interaction (HCI) studies. A large number of studies have investigated the perception and recognition of people's emotions from EEG signals; a bigger ambition, however, is to find ways to safely and effectively affect and improve people's mental ability with EEG processing and analysis as part of a closed-loop system. This is where BEAMERS comes into play.

What was done?

- A novel music-based emotion regulation system for daily use was designed without relying on deterministic emotion recognition models.

- A prototype was developed with a commercial EEG device.

- The system supports different emotion regulation styles, in which users specify their desired emotion variation, and achieves an accuracy of over 85% with 2-second EEG segments.

- The system calculated each user's emotion instability level from the variation in that user's emotional responses to the same song. The results were verified against the Big Five Personality Test.

- A questionnaire-based user experience study was conducted, in which participants reported high acceptability (83.1%) and usability (82.2% satisfaction) for this music recommendation system for emotion regulation.
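The emotion instability level is described above only at a high level. As a minimal sketch, assuming each listen of a song yields one (Δvalence, Δarousal) variation for the user, instability could be scored as the average spread of those variations per song. The function name `instability_level` and the dispersion formula are hypothetical illustrations, not the BEAMERS implementation.

```python
from __future__ import annotations

from statistics import mean, pstdev


def instability_level(responses: dict[str, list[tuple[float, float]]]) -> float:
    """Hypothetical instability score for one user.

    `responses` maps a song id to the (delta_valence, delta_arousal) variations
    recorded on repeated listens. The score is the mean, over songs, of the
    combined population standard deviation of those variations.
    """
    spreads = []
    for variations in responses.values():
        if len(variations) < 2:
            continue  # at least two listens are needed to measure variability
        dv = [v for v, _ in variations]
        da = [a for _, a in variations]
        # Combine valence and arousal dispersion into a single number per song.
        spreads.append((pstdev(dv) ** 2 + pstdev(da) ** 2) ** 0.5)
    return mean(spreads) if spreads else 0.0


# A user who reacts identically on every listen scores 0; a user whose reaction flips does not.
stable = {"song_a": [(0.5, 0.2), (0.5, 0.2)], "song_b": [(-0.1, 0.3), (-0.1, 0.3)]}
unstable = {"song_a": [(0.5, 0.2), (-0.5, -0.2)]}
print(instability_level(stable))  # 0.0
```

Averaging per-song spreads (rather than pooling all variations) keeps songs that naturally push emotion in different directions from inflating the score; whether BEAMERS does this is not stated.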

How does it work?

For training data collection, users were asked to listen to music while wearing an EEG headset, which recorded their brain waves throughout the session. After each song, users were instructed to rate the emotion variation induced by the song with valence/arousal (v/a) scores based on Russell's circumplex model.
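To make the training setup more concrete, here is a sketch of how one song's recording might be cut into 2-second windows, each labeled with the direction of the v/a variation the user reported after that song. The 256 Hz sampling rate, the `Segment` and `segment_recording` names, and the sign-based labeling (which treats "no change" as a decrease) are assumptions for illustration only.

```python
from __future__ import annotations

from dataclasses import dataclass

FS_HZ = 256   # hypothetical sampling rate; the real value depends on the headset
WINDOW_S = 2  # 2-second windows, matching the window length reported for BEAMERS


@dataclass
class Segment:
    samples: list[list[float]]  # [channel][sample]
    label: tuple[int, int]      # (valence direction, arousal direction)


def direction(delta_v: float, delta_a: float) -> tuple[int, int]:
    """Qualitative label: +1 for an increase, -1 for a decrease or no change (an assumption)."""
    return (1 if delta_v > 0 else -1, 1 if delta_a > 0 else -1)


def segment_recording(eeg: list[list[float]], delta_v: float, delta_a: float,
                      fs: int = FS_HZ, window_s: int = WINDOW_S) -> list[Segment]:
    """Split one song's multichannel recording into non-overlapping windows.

    Every window inherits the v/a variation the user reported after the song.
    A trailing partial window is dropped.
    """
    win = fs * window_s
    n_samples = min(len(ch) for ch in eeg)
    label = direction(delta_v, delta_a)
    segments = []
    for start in range(0, n_samples - win + 1, win):
        chunk = [ch[start:start + win] for ch in eeg]
        segments.append(Segment(chunk, label))
    return segments


# A 2-channel, 5-second recording yields two full 2-second windows.
recording = [[0.0] * (5 * FS_HZ), [1.0] * (5 * FS_HZ)]
segs = segment_recording(recording, delta_v=0.4, delta_a=-0.2)
print(len(segs), segs[0].label)  # 2 (1, -1)
```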

In real-life application scenarios, users wear an EEG headset (or a portable device with as few as 2 electrodes) and simply provide a desired emotional state (calm or energetic); a playlist is then generated automatically. Evaluations are made periodically to provide timely feedback and adjustment.
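The closed loop described above can be sketched as follows, assuming the requested state maps to a desired (valence, arousal) direction and that a trained model predicts each candidate song's effect from the latest EEG window. `TARGETS`, `next_song`, `build_playlist`, and the injected `predict` and `read_eeg` callables are hypothetical; in particular, mapping "calm" to (+valence, -arousal) and "energetic" to (+valence, +arousal) is an illustrative reading of Russell's circumplex model, not the authors' specification.

```python
from __future__ import annotations

from typing import Callable, Sequence

# Hypothetical mapping from the requested state to a desired
# (valence, arousal) direction: +1 = increase, -1 = decrease.
TARGETS = {"calm": (1, -1), "energetic": (1, 1)}

# Placeholder for the trained model: (latest EEG window, song id) -> predicted direction.
Predictor = Callable[[Sequence[float], str], tuple[int, int]]


def next_song(target: str, eeg_window: Sequence[float], candidates: Sequence[str],
              predict: Predictor, played: set[str]) -> str | None:
    """Return the first unplayed song whose predicted direction matches the target."""
    goal = TARGETS[target]
    for song in candidates:
        if song not in played and predict(eeg_window, song) == goal:
            return song
    return None


def build_playlist(target: str, candidates: Sequence[str], predict: Predictor,
                   read_eeg: Callable[[], Sequence[float]], length: int) -> list[str]:
    """Build a playlist, re-reading EEG before each pick (the periodic evaluation step)."""
    playlist: list[str] = []
    for _ in range(length):
        window = read_eeg()  # fresh EEG window so each pick reflects the current state
        song = next_song(target, window, candidates, predict, set(playlist))
        if song is None:
            break  # no remaining candidate is predicted to move the user toward the goal
        playlist.append(song)
    return playlist


# Toy predictor that ignores the EEG window and returns fixed directions per song.
preds = {"rain": (1, -1), "drums": (1, 1), "lullaby": (1, -1)}
print(build_playlist("calm", list(preds), lambda w, s: preds[s], lambda: [0.0] * 512, 3))
# ['rain', 'lullaby']
```

Injecting the predictor and the EEG reader as callables keeps the loop independent of any particular headset SDK or model.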

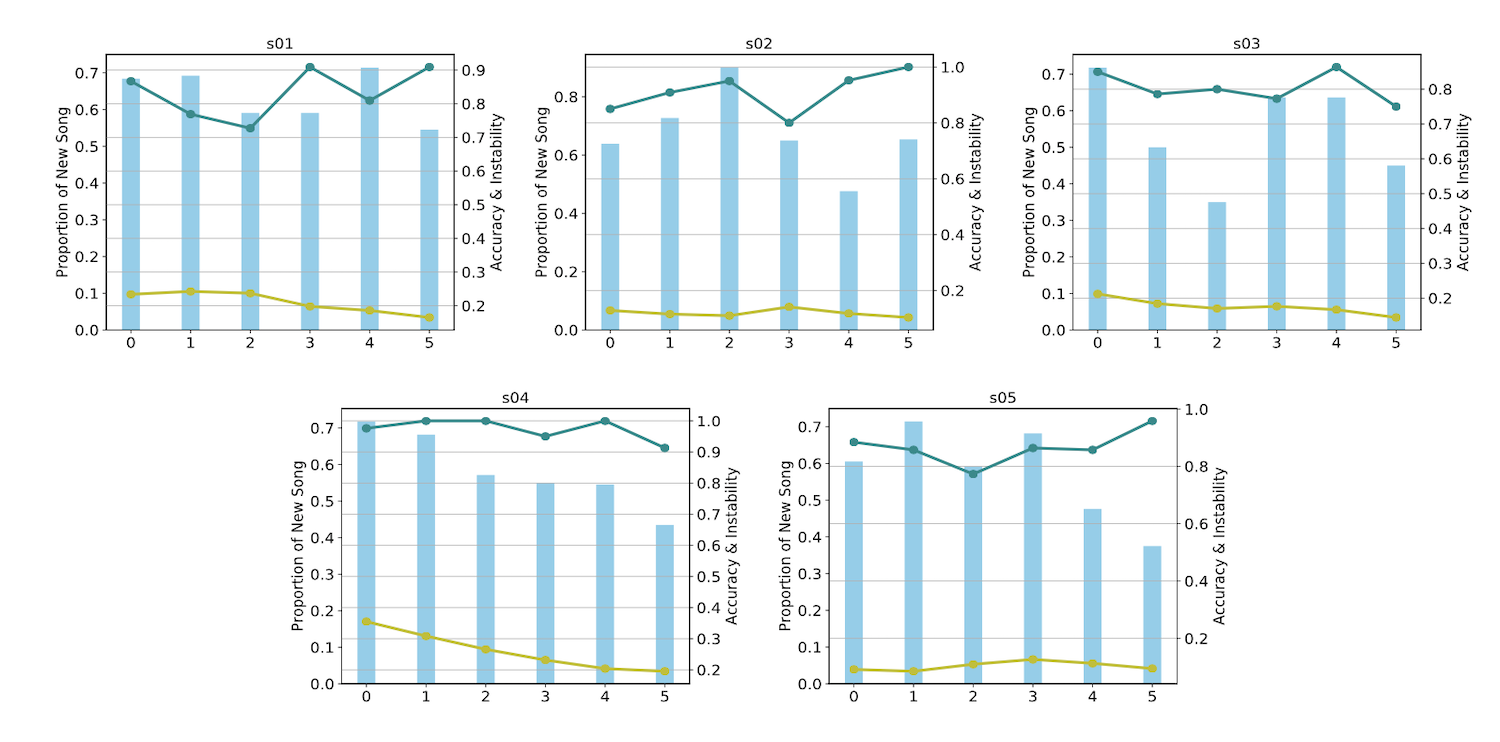

The testing results of the real-time system in a real-life scenario are shown below.

What is highlighted?

- Our approach bridges the gap between theoretical studies and practical, usable interactive systems for daily use by proposing a dynamic emotion variation model instead of relying on conventional deterministic emotion recognition methods.

- Based on qualitative predictions of emotion variation, our system recommends suitable songs, optimizing for both the user's listening preferences and their desired change of emotion.

- The system can distinguish a user's different emotion variations in response to the same song, which suggests that it could serve as an indicator in mental-health-related applications.
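The second highlight, optimizing for both listening preference and the desired emotion change, could be illustrated with a simple weighted score. This is a minimal sketch under stated assumptions: each song has a preference value in [0, 1] and a predicted (Δvalence, Δarousal) vector, alignment with the target is measured by cosine similarity, and `weight` is a hypothetical knob. None of these names or formulas come from the BEAMERS paper.

```python
from __future__ import annotations

import math


def alignment(predicted: tuple[float, float], target: tuple[float, float]) -> float:
    """Cosine similarity between predicted and desired (delta_valence, delta_arousal)."""
    dot = predicted[0] * target[0] + predicted[1] * target[1]
    norm = math.hypot(*predicted) * math.hypot(*target)
    return dot / norm if norm else 0.0  # a zero vector carries no direction


def rank_songs(songs: dict[str, tuple[float, tuple[float, float]]],
               target: tuple[float, float], weight: float = 0.5) -> list[str]:
    """Rank songs by weight * preference + (1 - weight) * alignment, highest first.

    `songs` maps a song id to (preference in [0, 1], predicted v/a variation).
    """
    def score(item: tuple[str, tuple[float, tuple[float, float]]]) -> float:
        pref, predicted = item[1]
        return weight * pref + (1 - weight) * alignment(predicted, target)

    return [name for name, _ in sorted(songs.items(), key=score, reverse=True)]


songs = {
    "a": (0.9, (0.1, 0.8)),   # well liked, but predicted to raise arousal
    "b": (0.4, (0.3, -0.6)),
    "c": (0.7, (0.2, -0.5)),
}
print(rank_songs(songs, target=(1.0, -1.0)))  # ['c', 'b', 'a']
```

Setting `weight=1.0` ranks purely by preference and `weight=0.0` purely by predicted emotion change, which makes the trade-off between the two objectives explicit.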